Multi-modal AI has taken a major leap forward this year, transforming how we interact with the Internet by combining text, images, audio, and video into seamless, intelligent experiences. From smarter virtual assistants that understand visual context to AI tools that generate rich media content from simple prompts, this advancement makes the web more intuitive, accessible, and dynamic.

But there’s a downside. CAPTCHA has long been a frontline security defense mechanism against bots. With rapid advancements in AI, including those in the area of multi-modal AI systems, CAPTCHA is quickly losing its effectiveness.

The Rise of Multi-Modal AI

To understand why CAPTCHA is failing, we must look at the evolution of AI. Traditional machine learning algorithms excel at certain tasks, such as recognizing patterns in numbers or predicting trends in datasets. However, modern AI systems, particularly multi-modal AI, are different. These systems can simultaneously process and understand various types of input, such as images, text, speech, and video, to perform more complex tasks.

Multi-modal AI systems solve CAPTCHAs more quickly and easily than ever before. With the integration of visual recognition, AI can understand the letters, numbers, and images used in CAPTCHAs. AI that understands both image and text data can identify distorted letters in a visual CAPTCHA, and AI that handles speech can solve the audio challenges in CAPTCHA tests.

AI’s Advantage Over Traditional CAPTCHAs

CAPTCHAs were originally designed to exploit the gap between human cognitive capabilities and the limited processing ability of automated systems. This gap allowed CAPTCHAs to be an effective method to separate human users from bots. However, AI advancements have closed this gap. CAPTCHAs no longer present a significant challenge to modern systems, as evidenced by widely available, inexpensive, and effective solving services such as CapSolver and CaptchaAI.

Here are a few reasons why CAPTCHA has lost its edge:

1. Image Recognition

One of CAPTCHA’s most common formats is the visual puzzle, where users are asked to identify objects like cars, street signs, or fire hydrants in images. The assumption was that machines would struggle to understand images as well as humans. However, multi-modal AI can now analyze and interpret visual data with high accuracy. On tasks like image classification, these models can match or outperform humans, allowing bots to bypass these challenges with little effort.

A prominent example is the “click on all images with traffic lights” CAPTCHA. AI models trained on large datasets of labeled images can accurately detect traffic lights even in distorted or low-resolution images. Consequently, CAPTCHAs relying on image recognition are becoming an ineffective hurdle against sophisticated bots.

2. Text and Audio Interpretation

CAPTCHAs also use distorted text and audio challenges to confuse bots. However, advances in character recognition have made it easy for AI to decipher distorted or blurred text with impressive accuracy. Such technologies enable bots to read text CAPTCHAs faster than humans can.

Similarly, audio CAPTCHAs, which are intended for visually impaired users, have become vulnerable to AI systems that can transcribe spoken words or digits. With advancements in speech recognition technology, AI can listen to distorted or sped-up audio, accurately transcribe it, and solve a CAPTCHA.

3. Speed and Scale

AI’s ability to work at scale is another factor that makes CAPTCHA increasingly obsolete. A human may solve a CAPTCHA within a few seconds, but AI can process thousands of CAPTCHAs simultaneously, and faster than any human could. This scale gives attackers a distinct advantage, especially when conducting credential stuffing attacks or other large-scale attempts to bypass security measures.
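To make the scale gap concrete, here is a back-of-the-envelope sketch. The latencies and the worker count are illustrative assumptions, not measurements; the only input taken from the text above is that a human needs a few seconds per challenge.

```python
def solves_per_hour(seconds_per_solve: float, workers: int = 1) -> float:
    """Return challenges completed per hour for a given latency and worker count."""
    if seconds_per_solve <= 0 or workers < 1:
        raise ValueError("latency must be positive and workers at least 1")
    return 3600.0 / seconds_per_solve * workers


# Assumed figures, for illustration only.
human_rate = solves_per_hour(seconds_per_solve=5.0)                    # one person
automated_rate = solves_per_hour(seconds_per_solve=2.0, workers=1000)  # parallel solvers

print(f"Human:     {human_rate:,.0f} solves/hour")
print(f"Automated: {automated_rate:,.0f} solves/hour")
print(f"Ratio:     {automated_rate / human_rate:,.0f}x")
```

Under these assumptions the automated pipeline completes 2,500 times as many challenges per hour. The exact ratio matters less than the fact that it grows linearly with the number of workers, while a human solver's rate does not.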

4. Behavioral Spoofing

Instead of solving the CAPTCHA directly, bots now use AI to mimic human-like behavior so precisely that CAPTCHA challenges might not even trigger. AI agents are starting to be used to simulate cursor movements, typing cadence, and timing between actions. Spoofed fingerprint data (e.g., device characteristics, sensor noise) can also be used to pass bot detection thresholds. An example is AI-enhanced Playwright bots paired with synthetic browser fingerprints.
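From the defender's side, the weakness of simple behavioral checks can be sketched as follows. This is a deliberately naive, hypothetical detector (the function name, the `min_cv` threshold, and both sample sessions are assumptions made for illustration, not any vendor's method). It flags sessions whose timing between events is too uniform.

```python
from statistics import mean, pstdev


def looks_scripted(intervals_ms: list[float], min_cv: float = 0.05) -> bool:
    """Flag a session whose inter-event timing is suspiciously uniform.

    Uses the coefficient of variation (standard deviation / mean) of the gaps
    between events. min_cv is a hypothetical threshold, not a recommended value.
    """
    if len(intervals_ms) < 2:
        raise ValueError("need at least two intervals")
    avg = mean(intervals_ms)
    if avg <= 0:
        raise ValueError("intervals must be positive")
    return pstdev(intervals_ms) / avg < min_cv


# Hand-written sample sessions (milliseconds between keystrokes).
fixed_delay_bot = [100.0, 100.0, 100.0, 100.0, 100.0]
varied_timing = [92.0, 131.0, 88.0, 150.0, 104.0]

print(looks_scripted(fixed_delay_bot))  # uniform gaps are flagged
print(looks_scripted(varied_timing))    # varied gaps are not
```

A single-statistic check like this only catches the crudest automation. Any session with varied timing passes, whether the variation comes from a person or from a bot imitating one, which is why behavioral thresholds alone are a fragile signal.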

AI Augmented by CAPTCHA Farms

Beyond AI-driven attacks, CAPTCHA farms, where low-paid workers manually solve CAPTCHA challenges, have been reducing the efficacy of this defense mechanism. Even if AI struggles with certain types of CAPTCHA (which is becoming less frequent), cybercriminals can still employ click farms based on human labor offered by companies such as 2CAPTCHA to quickly bypass security barriers.

CAPTCHA farms and AI are also used together. In this scenario, AI systems are likely the default method to solve CAPTCHAs, and human solvers are brought in only when necessary. This combination maximizes efficiency and accuracy while minimizing cost.
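The economics of an AI-first, human-fallback arrangement can be sketched with a simple expected-cost model. All prices and the success rate below are placeholder assumptions, not quoted rates from any service. The model also assumes every challenge is tried by AI first and that a human solver always succeeds on escalation.

```python
def expected_cost_per_challenge(
    ai_cost: float, ai_success_rate: float, human_cost: float
) -> float:
    """Expected cost when AI tries every challenge and failures go to a human.

    Every challenge pays ai_cost; the fraction the AI fails additionally
    pays human_cost.
    """
    if not 0.0 <= ai_success_rate <= 1.0:
        raise ValueError("ai_success_rate must be between 0 and 1")
    if ai_cost < 0 or human_cost < 0:
        raise ValueError("costs must be non-negative")
    return ai_cost + (1.0 - ai_success_rate) * human_cost


# Placeholder figures in dollars per challenge, for illustration only.
blended = expected_cost_per_challenge(
    ai_cost=0.001, ai_success_rate=0.9, human_cost=0.003
)
human_only = 0.003

print(f"AI first, human fallback: ${blended:.4f} per challenge")
print(f"Human only:               ${human_only:.4f} per challenge")
```

With these placeholder numbers the blended approach costs less than half of the human-only approach, and the result is still a solved challenge in every case. That is the sense in which the combination keeps accuracy high while driving cost down.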

How AI’s Multi-Modal Capabilities Are Exploiting CAPTCHA Weaknesses

The key advantage of multi-modal AI is its ability to combine insights from different types of data inputs. CAPTCHA tests often rely on just one form of input at a time (visual, audio, or text). Multi-modal AI can synthesize multiple streams of information, analyzing text, images, and even audio data simultaneously, to interpret CAPTCHA challenges in ways traditional bots could not.

For example, if a CAPTCHA displays an image with distorted text and provides an audio version for accessibility, multi-modal AI could process both the visual and the auditory inputs to solve the puzzle. This dual capability gives AI a significant edge in breaking through barriers.

Why CAPTCHA’s Failures Matter

The diminishing effectiveness of CAPTCHA poses serious implications for online security. CAPTCHA has been widely deployed across hundreds of thousands of websites, apps, and API-based services to prevent bots from engaging in harmful activities like scraping content, spamming forums, and launching credential stuffing attacks. As CAPTCHA becomes easier to bypass, the Internet becomes even more vulnerable to automated fraud and abuse.

The cost of CAPTCHA failures is substantial:

- Account Takeovers (ATOs): Attackers can use AI to quickly bypass CAPTCHAs and carry out credential stuffing attacks, leading to account breaches and brand damage.

- Fraud and Abuse: Bots can flood online services with fake sign-ups and commit checkout fraud, skewing data, increasing costs, and overwhelming resources.

- Diminished User Experience: As CAPTCHAs become less effective, organizations might introduce even more complex challenges (such as rotating image CAPTCHAs), frustrating legitimate users without significantly deterring attackers.

CAPTCHA Bypass in Practice

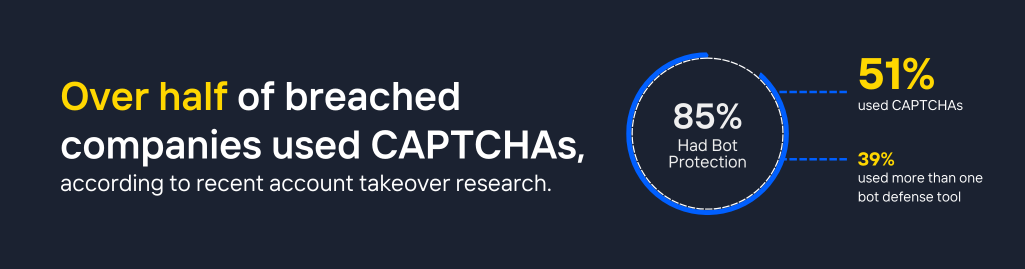

Kasada’s 2025 ATO Trends Report studied 22 credential stuffing groups. These groups targeted a total of 1,027 large organizations, compromising 6.2 million customer accounts across a range of industries including retail, eCommerce, entertainment, food, and travel.

Of the 1,027 companies, more than 500 that were breached relied on CAPTCHAs, either as the primary defense or as part of a multi-layered approach, to defend against automated threats such as credential stuffing. AI-enabled CAPTCHA bypass was identified as a key evasion tactic, often coupled with open-source credential stuffing tools such as OpenBullet.

Invisible Challenges as an Alternative to CAPTCHA

As multi-modal AI renders CAPTCHA ineffective, Kasada offers a modern solution with invisible challenges that operate behind the scenes. These challenges combine a secure browser environment, invisible signal collection, and fast ML-based detections to identify bots without relying on analyzing behaviors that AI can easily evade. Invisibility helps make defenses less vulnerable to AI advancements. Plus, the user is never interrupted, which makes for a far better experience than CAPTCHA.

As CAPTCHA is no longer a reliable security measure, Kasada’s invisible challenges represent the next generation of bot defense, providing robust security while maintaining a frictionless experience for legitimate users.

Bot Defense Beyond CAPTCHAs

With recent advancements in AI systems capable of easily solving visual, text, and audio challenges, CAPTCHA can’t offer the level of protection it did when conceived. Organizations must adapt by implementing more dynamic and robust security measures to stay ahead in the battle against automated threats. The focus must shift from reactive defenses to proactive strategies that evolve as fast as the threats themselves, especially as multi-modal and agentic AI advances.

Talk to an expert to learn more about how Kasada is transforming bot defense with a radical approach that makes our protection quick to evolve, difficult to evade, and invisible to customers.