A paradigm shift in bot detection

The data traditionally used to distinguish humans from bots can no longer be trusted. This shift creates a major problem for established anti-bot providers and for the customers who pay handsomely to be protected from bot attacks.

The botting community has mastered the “Know thy enemy and know yourself; in a hundred battles, you will never be defeated” approach.

The key to defeating an anti-bot provider is to understand what data it expects to see. Once bot operators understand this, they can build a bot that delivers that data exactly as expected.

Sun Tzu would’ve built a wicked botting operation.

So, let’s apply this logic in reverse to see what we can discover.

The marketplace for bypasses

The challenge for anti-bot providers is that the botting community is made up of highly innovative, intelligent individuals who continually develop bypasses for most established bot detection technologies.

Despite what the CDNs’ marketing claims, browser and TLS fingerprint data, device metrics, and machine learning are no longer effective ways to mitigate sophisticated bots. In reality, the complex machine learning models that power many of the leading anti-bot solutions are being fed fake data by bots, and therefore should no longer be trusted.

The limited lifecycle of an anti-bot bypass technique is what drives the continuous innovation cycle within the botting community.

The commercial value of a particular bypass technique is impacted by:

- The uniqueness of the technique,

- The websites that it can be used on, and

- The presence of a marketplace.

“Wanna buy a bypass?” – Cracker in a marketplace selling bypasses to anti-bot solutions.

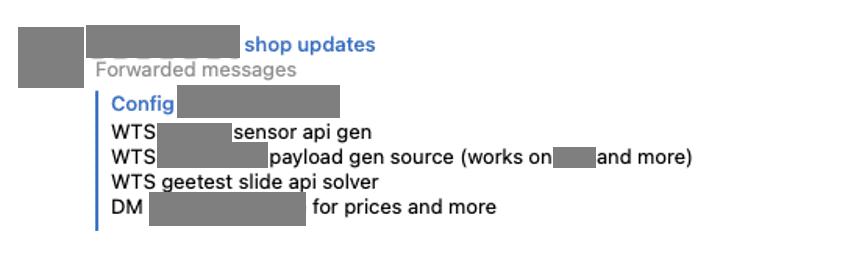

The cloudification of bypasses

As previously documented, the eCommerce botting community has recently been transformed by the emergence of solver API services. These cloud-based APIs are designed to keep their bypasses secret. Offering a bypass as an API allows the bot developer to protect both their intellectual property (i.e. the code required to defeat the anti-bot providers) and their revenue stream.

Because these API platforms carry significant operational overhead, access to a bypass costs anywhere from $100 to $10,000 a month. Once a bypass becomes mainstream, its commercial value plummets to the point that most developers open source their code. This is where things get interesting, and somewhat concerning.

Bot detection bypasses and fraud groups

Once a bypass technique is open sourced, the knowledge spreads to a broader set of communities, including fraud actors, account and credit card crackers, and rewards fraud groups.

Monetising the output of an illegal botting operation is harder for this community.

“Anyone want to buy access to someone else’s loyalty account?”

Buying and selling in this community is morally and ethically corrupt, as well as criminal.

This creates a practical problem: fraudsters need online communities in which to sell their stolen goods, and maintaining a presence on a social network when your primary purpose is selling illegal goods is a significant undertaking. Whereas Discord is the platform of choice in the sneaker botting world, Discord’s acceptable use policy (AUP) forces crackers onto hacker forums and messaging apps.

These marketplaces enable the fraudsters to trade goods and services, including:

- Configurations for cracking tools – self-serve account cracking (bring your own tool and stolen credentials)

- Bypass techniques to be used in cracking tools – code and/or third-party API

- Previously cracked accounts

- Previously cracked accounts with credit card details (Premium)

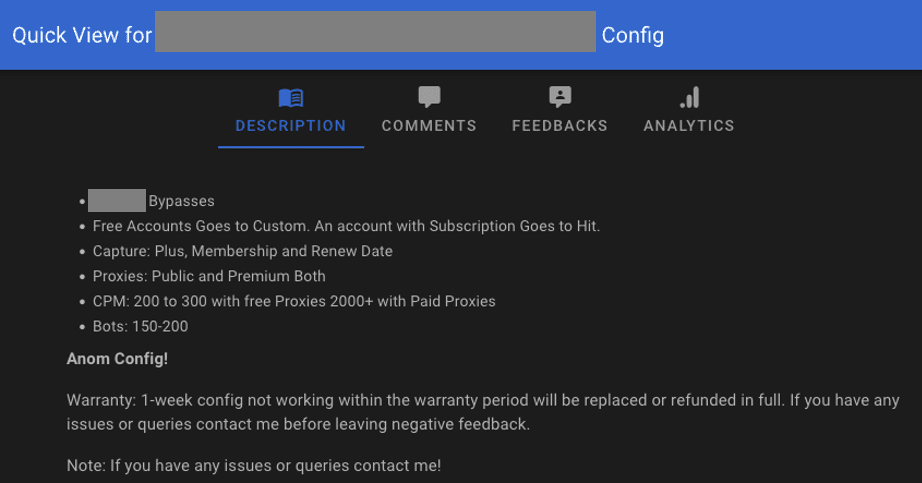

Example of a config

Config cost: $36.62

This configuration lets a buyer launch their own attack against your application and continually farm accounts, creating a steady revenue stream from stolen account credentials.

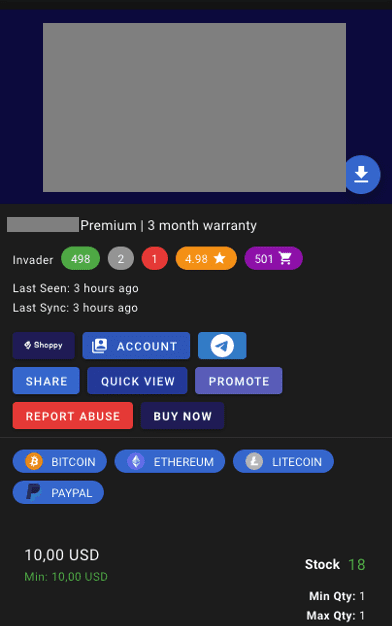

Example of accounts for sale

Cost: $10.00 per cracked account

The “product” being sold here is access to another person’s account. In most cases, the legitimate account owner has reused their username and password and has not enabled two-factor authentication.

What is the threat intel telling us?

First and foremost, the presence of threat intelligence about your applications, websites, or user accounts in a fraud community marketplace acts as an indicator of compromise.

A cracking tool configuration for your web or mobile app indicates that someone has successfully used an automation tool to attack your application. The fact that the config is for sale means that every buyer immediately gains access to the same attack technique.

An actor selling access to valid accounts on your application means that someone has successfully attacked your application and harvested your customers’ account credentials. These hijacked accounts are used for a variety of purposes, depending on the functionality of the application.

The presence of an account registration service suggests that a fraudster can automate the generation of accounts on your application.
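The three paragraphs above map each kind of marketplace finding to what it implies about your application. That mapping can be captured as a simple lookup table. The sketch below is illustrative only: the `ListingType` names, the `INDICATORS` wording and the `assess` helper are hypothetical, and it assumes you already have some way of collecting marketplace listings that mention your application.

```python
from enum import Enum


class ListingType(Enum):
    """Hypothetical categories of marketplace listing, taken from the paragraphs above."""
    CRACKING_CONFIG = "cracking tool config"
    CRACKED_ACCOUNTS = "cracked accounts"
    REGISTRATION_SERVICE = "account registration service"


# What each listing type implies about the targeted application (paraphrasing the text above).
INDICATORS = {
    ListingType.CRACKING_CONFIG: "An automation tool has been used successfully against the app, "
                                 "and every buyer of the config now has the attack technique.",
    ListingType.CRACKED_ACCOUNTS: "Customer account credentials have been harvested from the app.",
    ListingType.REGISTRATION_SERVICE: "Account creation on the app can be automated.",
}


def assess(listings: list[ListingType]) -> list[str]:
    """Return the indicators of compromise implied by the listings found for one application."""
    # Deduplicate repeated listing types while keeping first-seen order.
    unique = list(dict.fromkeys(listings))
    return [INDICATORS[listing] for listing in unique]


if __name__ == "__main__":
    findings = assess([ListingType.CRACKED_ACCOUNTS, ListingType.CRACKING_CONFIG])
    for finding in findings:
        print("-", finding)
```

In this framing, any non-empty result is worth treating as a sign that your defenses have been bypassed or that an endpoint is unprotected, which is the point the next section makes.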

So what?

Actionable intelligence is defined as “information that can be followed up on, with the further implication that a strategic plan should be undertaken to make positive use of the information gathered.”

The presence of cracking configs, stolen user accounts, or mass registration services clearly signals that fraudsters are using bots to defeat your security controls. For customers who invest in anti-bot solutions, these signals suggest that attackers have either bypassed their defenses or found an unprotected endpoint.

A case study for why threat intelligence matters

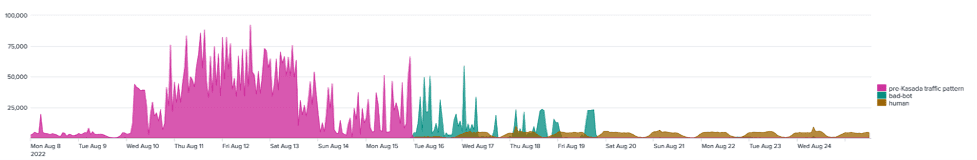

Kasada recently reached out to an organisation that was being targeted by the cracking community. The company in question operates an eCommerce website that allows users to purchase gift cards and to buy goods with them.

This website was being targeted by fraudsters who were using credential abuse attacks to launder money. Once an account was cracked, the fraudsters purchased e-gift cards, which were then used to purchase goods.

The traffic patterns tell the story. Before Kasada was in place, the volume of attack traffic was substantial because the company’s existing anti-bot defenses were regularly bypassed. After Kasada switched to protect mode, the attacks stopped and the fraudsters began to disappear, with all attack traffic subsiding within 5 days.

As a result, cracked accounts for this company have stopped appearing on the marketplace, as confirmed both by monitoring fraud claims and by our own threat intelligence monitoring of these secondary marketplaces.

What you can do now

If you currently invest in an anti-bot solution, here are four critical questions for you to ask:

- How does the provider guarantee that bots cannot feed fake data to corrupt their data sets?

- What steps have they taken to prevent adversaries from reverse engineering their detection logic?

- How often do they make changes to their bot detection scripts and algorithms?

- How do they use threat intelligence to identify bypass techniques?

Request a personalised threat intel report to find out whether your organisation has any indicators of compromise.