Traditional Bot Management Grew Out of Web Application Firewalls

Web Application Firewalls (WAFs) were one of the first tools available to protect web applications against the vulnerabilities and risks considered most critical, including but not limited to the OWASP Top Ten web application security risks.

As the primary tool available at the time, WAFs were also one of the first tools used to block bad bots. Teams created WAF rules based on reputation, assigning risk scores to IP addresses according to their historical behavior. Basic mitigating actions, such as blocking or rate limiting, were used to stop or manage questionable IPs.
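To make that legacy model concrete, here is a minimal TypeScript sketch of an IP-centric rule: look up a reputation score, block above a threshold, and otherwise apply a fixed-window rate limit per IP. The class name `IpReputationRule`, the 0 to 100 score scale, and the default thresholds are hypothetical illustrations, not any vendor's actual configuration.

```typescript
// Hypothetical sketch of a legacy, IP-centric bot rule: block IPs whose
// reputation score crosses a threshold, and rate-limit all other IPs.
type Action = "allow" | "rate_limit" | "block";

interface RateWindow {
  start: number; // ms timestamp when this IP's current window began
  count: number; // requests seen from this IP in the current window
}

class IpReputationRule {
  private readonly windows = new Map<string, RateWindow>();
  private readonly reputation: Map<string, number>;
  private readonly blockScore: number;
  private readonly windowMs: number;
  private readonly maxPerWindow: number;

  constructor(
    reputation: Map<string, number>, // assumed risk score per IP, 0 (clean) to 100 (bad)
    blockScore = 80, // assumed blocking threshold
    windowMs = 60_000,
    maxPerWindow = 100,
  ) {
    this.reputation = reputation;
    this.blockScore = blockScore;
    this.windowMs = windowMs;
    this.maxPerWindow = maxPerWindow;
  }

  decide(ip: string, nowMs: number): Action {
    // Reputation check: IPs with no history default to a clean score of 0.
    if ((this.reputation.get(ip) ?? 0) >= this.blockScore) {
      return "block";
    }
    // Fixed-window rate control, keyed only by IP address.
    let w = this.windows.get(ip);
    if (w === undefined || nowMs - w.start >= this.windowMs) {
      w = { start: nowMs, count: 0 };
      this.windows.set(ip, w);
    }
    w.count += 1;
    return w.count > this.maxPerWindow ? "rate_limit" : "allow";
  }
}
```

Because every decision is keyed to IP history, an operator who routes each request through a different proxy IP starts with a clean score and a fresh rate window every time, so nothing in this rule ever fires.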

It didn’t take long, however, before bot operators figured out how to work around these WAF defenses. For example, they would hide behind proxies and distribute requests across many IPs while conducting “low and slow” attacks. As a result, a new category called Bot Management emerged to fill the need for more advanced capabilities to detect and manage bot attacks.

Complexity Breeds Fragility

WAF rules are notoriously difficult to configure and update, and they are prone to high false positive rates. This is why so many WAFs are kept in monitor-only mode and require ongoing maintenance to add rules as new vulnerabilities and exploits are identified. While WAF providers attempt to make this easier with “out-of-the-box” configurations, these still require updates and maintenance over time. The majority of bot management products that evolved from WAF inherited the same challenges.

Because bot management was derived from WAF, it is fundamentally anchored in the need to customize rules and assign risk scores, and that need has carried forward into bot detection. In essence, configuring and managing bot traffic has been stacked on top of the same complexity required to protect web applications from vulnerabilities.

The Need for a New Engine

Just as WAFs became ineffective at stopping bad bots, adversaries also figured out how to bypass the first generation of bot management systems. The foundation of those systems, rules to create and risk scores to assign, is a fatal flaw when it comes to mitigating bots. Bot operators have found more advanced ways to look like humans, by leveraging residential proxy networks and aged accounts, and to act like humans, by using script recorders and replaying recordings of human gestures.

The result? It has become a fool’s game to try to identify bad IPs and search for behaviors that look suspect. Even machine learning (ML) takes time to identify new attack patterns, and ML models can be easily tricked when bot operators feed them misleading data.

In the fight against bad bots, this is often referred to as the “whack-a-mole” game: adversaries adapt in seconds, while defenders are stuck reacting with a toolset that cannot adapt quickly enough to be effective. Consequently, sophisticated bots get through, resulting in a huge false negative problem.

As with WAF, the more stringent risk scores are made in order to tighten security, the more false positives arise. So not only do bad bots get through, but the user experience is also greatly compromised, which harms key business objectives such as online conversion rates.

The top OWASP Automated Threats (OATs) include credential stuffing, carding, denial of inventory, and web scraping. Given what’s in the news these days, these threats clearly remain a big problem, even for organizations that have already deployed bot mitigation solutions, because those tools have proven ineffective against them.

A Fresh Start

Had bot mitigation not grown out of the WAF market 10 years ago, would things look different? For newer entrants to the anti-bot market, the answer is a resounding yes. They have an opportunity for a fresh start and new thinking about how to detect and stop bots in a modern way. And what if addressing the WAF market could be an offshoot of bot management, rather than the other way around?

Automation: The Common Denominator

The common denominator of all automated threats is… automation itself. Automation is cheap, easy to use, and makes massive profits viable. So instead of looking for bad IPs and behaviors, what if you looked for traces of automation itself? Whenever bots interact with websites, mobile apps, and APIs, they leave traces of automation behind, and a good enough detector can identify them.

Network access is shifting to zero trust; what if bot mitigation applied the same philosophy? In other words, assume every request is guilty until proven innocent. To prove its innocence, a request would need to demonstrate that it operates within the constraints of a legitimate browser or mobile app and that no traces of automation are detected. In doing so, you could eliminate the need for WAF rules and risk scoring, leaving a binary decision: either it’s a bot or it’s a human.

If it were simple to make this binary decision, everyone would be doing it. But client-side interrogation techniques (note: not fingerprinting), when done right, can be extraordinarily precise at identifying the presence of automation. Advanced attributes can be collected from the client to look for strong indicators of automation, such as headless browsers and automation frameworks like Selenium, Puppeteer, and Playwright. This includes even highly customized versions of these frameworks that open-source communities iterate on in an attempt to evade detection, such as Puppeteer Extra Stealth.
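As a rough illustration of the idea, and deliberately far simpler than real client-side interrogation, the TypeScript sketch below turns two well-known public automation indicators into a binary bot-or-not verdict. `navigator.webdriver` is defined by the W3C WebDriver specification and reports `true` when the browser is under WebDriver control, and older headless Chrome advertises `HeadlessChrome` in its user agent. The function and type names are hypothetical, treating a missing signal payload as a bot is an assumption that mirrors the “guilty until proven innocent” stance, and this is not Kasada’s method: stealth tooling such as Puppeteer Extra Stealth patches exactly these signals.

```typescript
// Hypothetical, deliberately naive sketch: a few public automation indicators
// combined into a binary verdict. NOT Kasada's method; stealth plugins can
// spoof every signal checked here.

// Only the fields we read, so the check also runs outside a browser.
interface NavigatorLike {
  webdriver?: boolean; // true when the browser is under WebDriver control
  userAgent: string;
}

interface Verdict {
  isBot: boolean; // binary decision: bot or human, no risk score to tune
  reasons: string[]; // which indicators fired
}

function detectAutomation(signals: NavigatorLike | undefined): Verdict {
  // Guilty until proven innocent (assumption): a client that never ran the
  // collection script, such as a raw HTTP library, sends no signals at all.
  if (signals === undefined) {
    return { isBot: true, reasons: ["no client-side signals received"] };
  }

  const reasons: string[] = [];
  if (signals.webdriver === true) {
    reasons.push("navigator.webdriver is true");
  }
  if (signals.userAgent.includes("HeadlessChrome")) {
    reasons.push("user agent advertises HeadlessChrome");
  }
  return { isBot: reasons.length > 0, reasons };
}

// In a browser, the real navigator object satisfies NavigatorLike:
//   const verdict = detectAutomation(navigator);
```

A real deployment would need far more signals than these two, since each one can be patched by the client; the point of the sketch is only that the output is a yes/no decision rather than a score.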

Bot-or-not decisions based on automation itself are made on the first request, before requests are ever let into your infrastructure, and this includes requests from new bots never seen before. As bot operators change their methods, the presence of automation persists.

Many OWASP Top 10 Web Application Security Risks Require Automation Too

Guess what… the majority of application risks and vulnerabilities that today’s WAFs are meant to address are also exploited using automation. Unwanted vulnerability scanning, many of the OWASP Top 10 web application security risks (such as injection, broken authentication, and broken access control), and known vulnerabilities all remain exposed because WAFs depend on ongoing rule updates and cannot accurately detect the presence of automation.

Take SQL injection as an example. To succeed, an attacker must either test every input manually, which is highly impractical, or use automation to test large numbers of injection strings en masse. In principle, this is orchestrated much like a credential-stuffing attack, but with injection strings instead of usernames and passwords. Likewise, when a new zero-day application vulnerability emerges, adversaries use automated tools to test thousands of URLs to see which systems haven’t been patched and make easy targets.

While there are, and always will be, application exploits that don’t require automation, a modern bot mitigation tool that can detect the presence of automation can serve as a primary defense layer against many of the high-priority threats that WAFs still struggle to stop.

The Future of Application Security

Forrester Research recently predicted that many of WAF’s core functions will be subsumed by bot management, enabling bot management to overtake traditional WAF as the core application protection solution by 2025:

“Bot management will overtake traditional WAF: We also predict that many of WAF’s core functions will be subsumed by bot management, enabling it to overtake traditional WAF as the core application protection solution by 2025. Bot management detects and prevents a range of bot-based attacks, including credential stuffing, web scraping, inventory hoarding, and influence fraud. Bot management tools protect applications from bad bots while allowing good bots and ensuring that human users are not stymied by unnecessary captchas and challenges.”

– Sandy Carielli, Forrester Principal Analyst

We love this prediction, but what will it take for it to happen in a way that fundamentally changes the tools available and makes them more effective at accurately protecting web applications?

Make AppSec Easy, Effective, and Invisible

In order for Bot Management to overtake traditional WAF as the core protection solution for websites, mobile apps, and APIs, our vision at Kasada is to make AppSec easy, effective, and invisible.

- Easy: the whack-a-mole game that exists today is a symptom of WAF rules and risk scoring. Protection should be achieved without WAF rules or risk scores. Security and IT teams should not have to accept more false positives as the price of better security. Any ongoing maintenance should be minimal, if it is required at all.

- Effective: bot operators will inevitably continue to adapt to evade detection, so your application security defenses must be able to adapt in real time to remain effective and accurate.

- Invisible: ineffective methods such as CAPTCHA ruin the customer experience. CAPTCHA is essentially the security provider avoiding a decision and placing the burden of validation on the user. CAPTCHAs and other proposed methods that add friction to the user experience, such as hardware keys, need to be avoided.

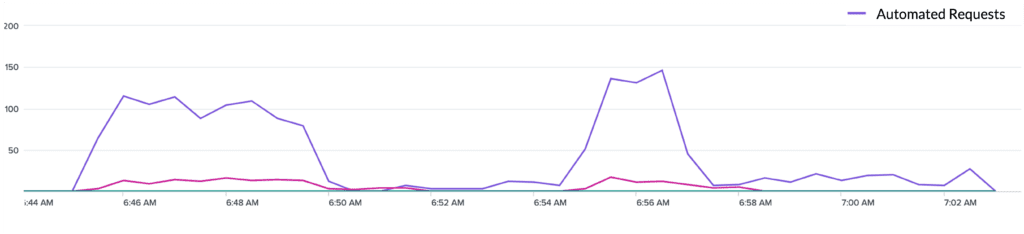

A Recent Example: Scanning for Injection Vulnerabilities

A customer’s website was recently targeted by an unwanted automated vulnerability scan run with the Acunetix tool. The IP address conducting the scan was found to be hidden behind a residential proxy network, so blocking based on reputation would not have been effective. Within minutes, the scan probed multiple pages of the application for injection vulnerabilities by injecting SQL strings and many variants, triggering several thousand events.

Kasada detected and blocked this injection attack in real time from the first request, using client-side interrogation that detected the automation toolkit conducting the scan. A WAF might also have stopped this scan, but only if the proper rules had already been in place.

Request a demo to learn how our modern approach to detecting malicious automation stops the bot attacks others can’t while adding a defensive layer that offloads the cost and complexity associated with WAFs.